By Scott Becker

May 19, 2025

AI Agents, Workflow, and LLM Productivity in 2025

From the Code & Cognition Podcast by Olio Apps

In a recent episode of Code & Cognition, the Olio Apps team sat down to talk shop about the evolving world of Large Language Models (LLMs), agentic workflows, and the ways these tools are changing how we design, scope, and implement software projects in 2025.

Here's a quick recap of key insights from that conversation, plus takeaways for developers and teams experimenting with generative AI app development.

Tools like Amazon Bedrock and Anthropic’s AI agent frameworks are pushing agentic development forward: developers define tools, provide schema access, and let the LLM decide how to achieve an outcome, such as a dynamic project status report pulled from multiple sources.

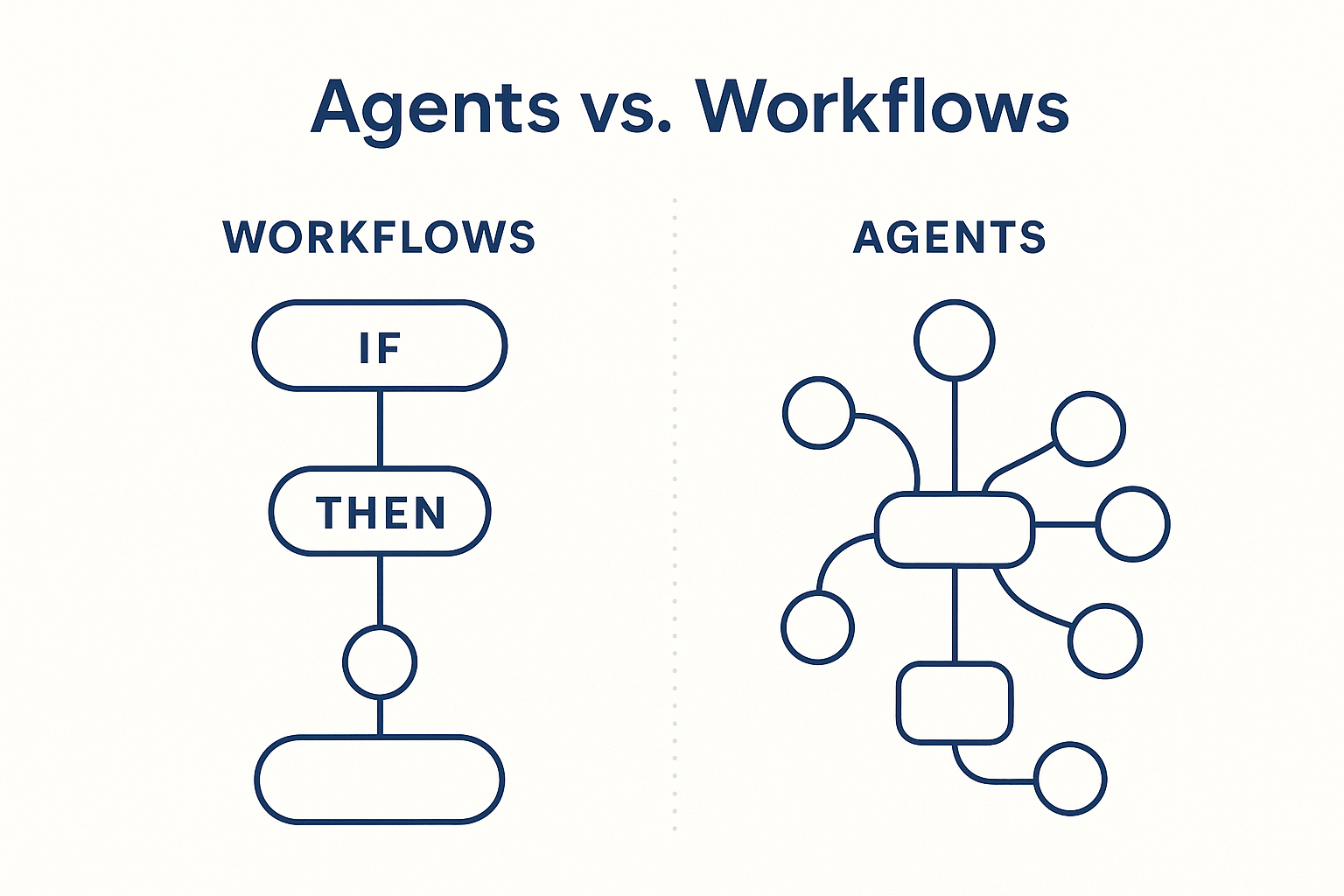

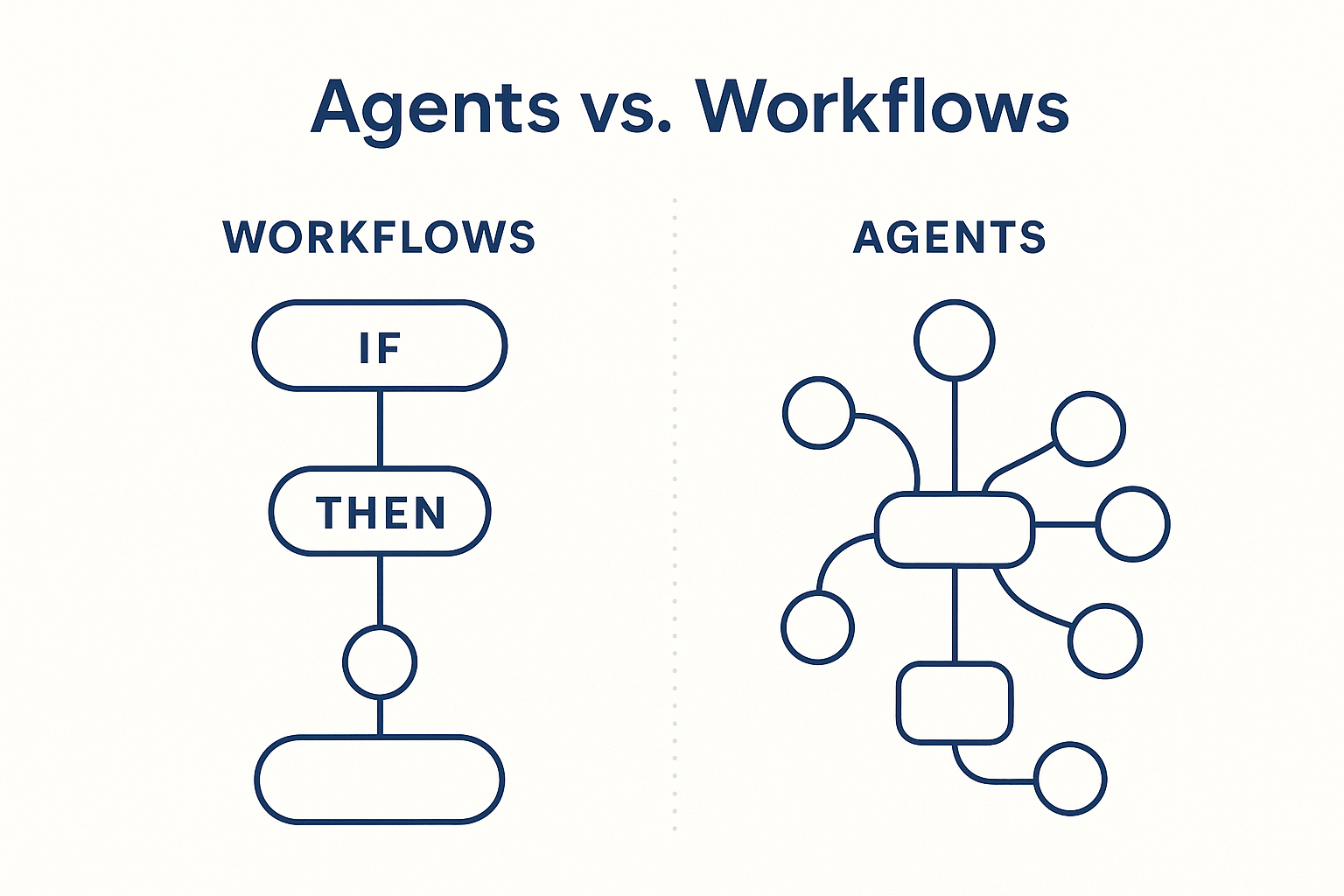

Agents vs. Workflows: What’s the Difference?

There’s a lot of buzz around “AI agents,” but what distinguishes them from traditional LLM workflows?

- Workflows: Think of these as on-the-rails sequences. They follow predefined steps like “if this, then that” logic.

- Agents: More autonomous. They dynamically decide what steps to take and how to use tools, making decisions in real time. This allows for more flexible, adaptive systems.
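
The distinction above can be sketched in a few lines of Python. This is a minimal illustration, not how Bedrock or any specific framework implements it: the tool functions, `stub_policy`, and the status-report data are all hypothetical, and `stub_policy` stands in for the LLM call that would pick the next tool in a real agent.

```python
from typing import Callable, Dict, Optional

State = Dict[str, str]

# Hypothetical tools: plain functions standing in for real integrations.
def fetch_commits(state: State) -> None:
    state.setdefault("commits", "3 commits merged")

def fetch_hours(state: State) -> None:
    state.setdefault("hours", "12 hours logged")

def write_report(state: State) -> None:
    state["report"] = f"Status: {state['commits']}, {state['hours']}"

TOOLS: Dict[str, Callable[[State], None]] = {
    "fetch_commits": fetch_commits,
    "fetch_hours": fetch_hours,
    "write_report": write_report,
}

def run_workflow() -> State:
    """Workflow: the order of steps is fixed in code."""
    state: State = {}
    for step in (fetch_commits, fetch_hours, write_report):
        step(state)
    return state

def stub_policy(state: State) -> str:
    """Stand-in for an LLM that inspects the state and names the next tool."""
    for key, tool in (("commits", "fetch_commits"),
                      ("hours", "fetch_hours"),
                      ("report", "write_report")):
        if key not in state:
            return tool
    return "done"

def run_agent(policy: Callable[[State], str],
              initial: Optional[State] = None,
              max_steps: int = 10) -> State:
    """Agent: the policy chooses each next step at run time."""
    state: State = dict(initial or {})
    for _ in range(max_steps):  # cap steps so a confused policy cannot loop forever
        choice = policy(state)
        if choice == "done":
            break
        TOOLS[choice](state)
    return state
```

Calling `run_agent(stub_policy, {"hours": "5 hours logged"})` skips the hours lookup because the policy sees that the data is already present, while `run_workflow()` always runs all three steps in the same order.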

Real-Time Evaluation & Human-in-the-Loop

One emerging pattern we are interested in exploring more: LLM as judge. This is where a more expensive model is used to evaluate output from a cheaper one. This cost-effective technique can be used live within systems, not just during prompt engineering. For example:

- A cheaper model drafts responses.

- A more advanced LLM evaluates output against success criteria.

- Feedback is looped back until the result meets expectations.
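
The loop described above might look roughly like the following sketch. The `drafter` and `judge` callables are assumptions standing in for calls to a cheaper and a more capable model, the `Verdict` type and the stub implementations are hypothetical, and the fallback to a human reviewer is one possible way to combine the pattern with human-in-the-loop review.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

@dataclass
class Verdict:
    passed: bool
    feedback: str

def draft_with_judge(
    task: str,
    drafter: Callable[[str, Optional[str]], str],
    judge: Callable[[str, str], Verdict],
    max_rounds: int = 3,
) -> Tuple[str, bool]:
    """Return (draft, accepted); accepted is False if no draft passed in time."""
    feedback: Optional[str] = None
    draft = ""
    for _ in range(max_rounds):
        draft = drafter(task, feedback)   # cheaper model writes or revises
        verdict = judge(task, draft)      # stronger model checks success criteria
        if verdict.passed:
            return draft, True
        feedback = verdict.feedback       # loop the critique back into the next draft
    return draft, False                   # caller can escalate to a human reviewer

# Hypothetical stubs so the sketch runs without any model API.
def stub_drafter(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "Project is fine."
    return "Project is on track: 3 of 5 milestones complete."

def stub_judge(task: str, draft: str) -> Verdict:
    if "milestones" in draft:
        return Verdict(True, "")
    return Verdict(False, "Mention milestone progress.")
```

Capping the rounds keeps cost bounded: the expensive judge runs at most `max_rounds` times per task, and anything it never accepts is returned with `accepted` set to `False` instead of being retried indefinitely.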

Cursor, Codegen, and Managing Tech Debt

Cursor has become a favorite among the Olio team for AI-assisted programming. Used well, it has boosted developer velocity by at least 40% for our teams. But with great speed comes great responsibility! Tips to maximize productivity while minimizing tech debt:

- System Prompts & Cursor Rules: Use project-specific guidance files to maintain consistent output and enforce patterns.

- Refactor Often: Don’t just copy/paste generated code. Commit to reviewing and improving your code base according to your existing processes.

- Name @mentioning: Reference existing code and structures when asking Cursor to generate similar components. Giving Cursor this context is key for optimal outputs.

What’s Next in Q2?

As we move deeper into Q2, our team is eager to explore:

- Agentic workflows using Bedrock’s orchestration tools

- Cross-source LLM synthesis: merging data from time trackers, Slack, and GitHub

- Natural language end-to-end testing powered by LLMs + Playwright

- More human-in-the-loop flows for approvals, refinements, and efficient collaboration between team members and projects

Final LLM Dev Tip: Don't Break the Illusion

System prompts matter. In testing, Claude consistently “stayed in character” while GPT-4 often broke with minimal pressure. If your app’s identity relies on LLM behavior, run forensic checks and ensure your system prompt is structured, clear, and hard to break. You may find that some LLMs hold on to your system prompt more easily than others, and it may be more advantageous to stick with those LLMs going forward.

Final Thought

Whether you’re just starting with LLMs or deep in building intelligent systems, the landscape is shifting fast. Stay agile, experiment, and don’t forget: great AI starts with great context. Listen to the full conversation on the Code & Cognition Podcast, and follow along as we continue building the future of AI-powered software.

Scott Becker

CEO

A Tampa, Florida native, Scott relocated to the Pacific Northwest in 2008 and founded Olio Apps in 2012. When not hanging out with his family, biking, or playing in his punk rock band, Scott helps define the scope of customer engagements and oversees the business administration of Olio Apps. Scott’s areas of specialty are in DevOps, product design, technical design, and full stack engineering in React, Golang, and Ruby.
