By Josh Proto

Jan 25, 2026

Breakin' the Louvre: Notes From a One-Day Multiplayer Hackathon With AI in the Loop

At a recent Olio Apps hackathon, teams were given a clear assignment: build a multiplayer game in one day, using a generative AI code assistant, and make it both fun and complete!

Importantly, the multiplayer aspect of the game couldn't be optional. Teams could use their gen-AI code assistants however they saw fit, as long as whatever existed at the end of the day could run, support multiple connected players, and be demo-ready by the afternoon.

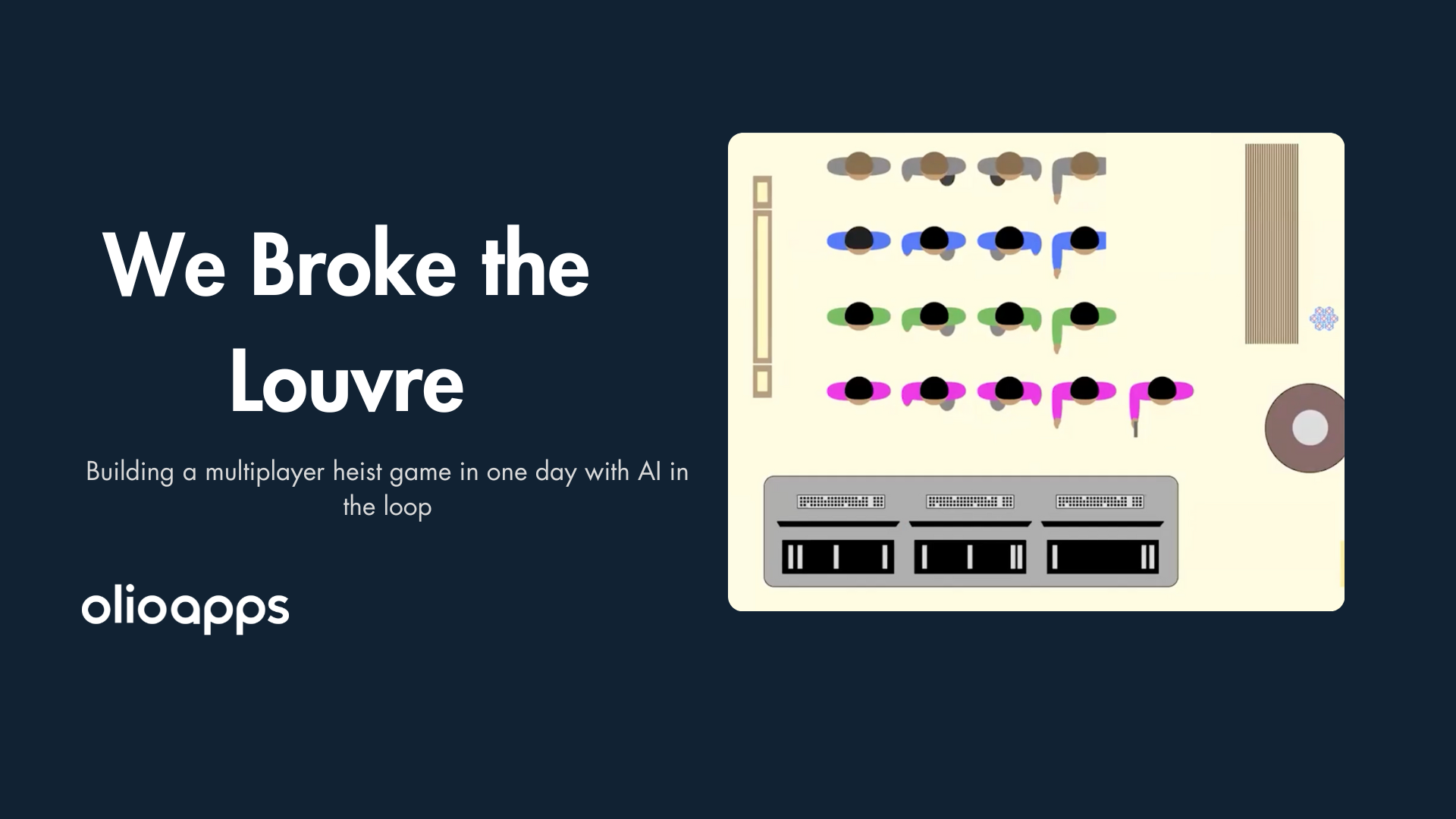

One team's response to that prompt became Breakin' the Louvre, a three-player top-down heist game inspired by old-school arcade crime games and a slightly absurd real-world news story. Players navigate the ground floor of the Louvre museum, working together to avoid guards, locate objectives, disable security systems, and escape as a group, which led to some engaging gameplay.

What makes the project worth examining isn't just the novelty of a hackathon game, but what it revealed about building real software when a generative AI tool is not just present but required, and when time pressure leaves very little room for mistakes. In particular, the team had to find ways to accelerate development while making sure their AI tools didn't go off the rails!

The Hackathon Provided Real Constraints

The shape of Breakin' the Louvre was dictated almost entirely by the hackathon format. Teams were expected to produce a multiplayer game in a single workday, and you can't fake a multiplayer game. The game needed shared state, real-time communication, and some kind of backend coordination. Even a simple multiplayer game forces you to solve problems that single-player prototypes can usually ignore.

On top of that, using a generative AI code assistant wasn't optional. The intent wasn't to sprinkle AI on top of an otherwise traditional workflow; the expectation was that AI would meaningfully participate in development, in whatever way each team defined. Although the team came up with the concept a week prior, they didn't initialize the repo until the morning of the hackathon, and they even began creating game assets on the bus ride over to the hackathon location!

Given that setup, the team made a pragmatic early decision about how to use their gen-AI code assistant, Claude: instead of debating frameworks or manually scaffolding infrastructure, they would describe the game and its constraints to Claude and let it recommend a stack it could realistically implement in the time available.

Letting the AI Choose Tools It Could Actually Use

The AI suggested a browser-based stack built around Phaser for the game layer and Colyseus for real-time synchronization. None of the developers worked on games day-to-day, yet because Claude handled the implementation, no one had to crack open documentation during the hackathon. The team didn't deliberate over whether these were the best tools; they trusted that Claude knew which tools it could integrate with. That bet paid off. Within the first couple of hours, the team had a functional multiplayer prototype: players could connect to the same session, move around the map, and see each other in real time. At that point, the project already satisfied the core requirement of the hackathon, and the rest of the day was about layering in behavior rather than establishing foundations.

From Prototype to Game

Once the multiplayer spine was in place, the team focused on turning the prototype into an actual game. The core loop was straightforward. Three players spawn into the same space. Guards patrol the map. Running into a guard gets you caught. To escape, players needed to find a sticky note with a password, locate the crown, access the security control room, wipe the footage, and reach the exit together. Each objective required coordination, and the game only worked as intended if all players stayed alive long enough to complete it.

Sound design was added late in the day, using AI-generated music inspired by 1970s crime films. It turned out to be one of the biggest perception upgrades, and everyone who played the game found the music engaging. The game felt more finished the moment sound entered the picture, even though the underlying mechanics hadn't changed.

By mid-afternoon, Breakin' the Louvre had a beginning, middle, and end. It wasn't fully polished, and the team still wanted to add some components, but it was coherent, complete, and met the hackathon's criteria.

Debugging When You Didn't Write the Code

As the game grew more complex, bugs appeared in predictable places: collision detection, projectile behavior, sprite orientation, and general edge-case weirdness. What was different was how debugging worked. Most of the code had been written by Claude Code, which meant traditional debugging habits didn't always apply. There was no stepping through code line by line or setting breakpoints to inspect state. Instead, when asked to help debug, the AI relied heavily on logging: it ran the server, interpreted the log output, and iterated on its changes from there.

In many cases, this worked surprisingly well and served as a debugging paradigm the team would not otherwise have considered. Claude could add logs, run the application, read the output, and adjust its approach faster than a human could in the same loop. In other cases, though, it struggled in familiar ways. Seemingly simple requests like "move the bullet slightly to the right" produced unexpected results. Visual intent didn't translate cleanly into code changes; the AI lacked the shared intuition humans have about spatial relationships in games and how players expect shooting mechanics to look and feel.

Moments like this were instructive. Claude proved most effective when success criteria were explicit and its outputs were machine-readable. It was much less reliable when a problem depended on subjective judgment about how something should look or feel, even when the expected behavior was conventional in games.

Collaboration Looked Different Than Usual

One unexpected side effect of the AI-heavy workflow was how it changed team dynamics. While the AI processed requests, sometimes for non-trivial stretches, the team didn't have to write code. Instead, they discussed priorities, adjusted scope, decided what could be cut if time ran out, and talked through architecture and design. In a traditional hackathon, that kind of conversation often gets crowded out by frantic implementation. Here, it became part of the rhythm of the day: waiting for the AI created natural pauses that made room for higher-level deliberation.

Deployment Wasn't Pretty, But It Worked

Deployment happened late in the day using Railway. Under the hood, the setup resembled several EC2 instances handling the multiplayer backend and the frontend. There were some initial issues, though. Claude wanted these workloads to run as two separate deployment services, but after some negotiating with Claude, the team opted to unite everything in Railway. At first, the different services didn't talk to each other cleanly, and at one point the AI kept breaking the same part of the deployment configuration and had to be explicitly told to stop touching it. Still, the game ended up deployed with a setup simple enough to design and execute within the hackathon's time limit.

Knowing When Not to Use AI Still Mattered

The team was clear about one thing: using AI everywhere isn't automatically faster. Some tasks would have been quicker to do manually, and some problems took longer with AI than they would have with direct human intervention. In a few cases, the AI entered loops that required a human to reset its direction. On several occasions, optimizing SVGs and particle collision animations turned out to need only simple fixes, yet those tasks became heavy time sinks for Claude Code. This was an important reality check for the team and a reminder that they still needed to stay in the driver's seat and provide a steady hand to guide their AI teammate!

What This Hackathon Actually Shows

Breakin' the Louvre demonstrates the power of AI code assistants like Claude Code in the hands of an effective team: one that can design systems up front, delegate components or even whole features to the AI, and know when to step in, steer, and clean up the work of its AI teammate. In turn, being free to prioritize higher-level tasks like application architecture and game design was an unexpected benefit of using Claude Code, and it ultimately allowed the team to ship Breakin' the Louvre within the time and design constraints. The key point is that this project didn't work because AI is magic. It shipped because the team scoped it appropriately, made pragmatic decisions, and took calculated risks on the capabilities of their Claude Code-powered teammate, knowing it could extend what they could do but would be an imperfect collaborator.

Josh Proto

Cloud Strategist

Josh is a Cloud Strategist passionate about helping engineers and business leaders navigate how emerging technologies like AI can be skillfully used in their organizations. In his free time you'll find him rescuing pigeons with his non-profit or singing Hindustani & Nepali Classical Music.