By Scott Becker

Apr 30, 2025

How We Solved AWS Lambda Scaling with Auto Scaling and Provisioned Concurrency

When your application grows quickly, so do the demands on your infrastructure. AWS Lambda scales automatically by design, but without the right configuration that scaling can bring cold start latency, manual overhead, and escalating costs. As part of our Fractional CTO Service, we’ve helped clients address cold starts and scaling challenges in AWS Lambda, and here we highlight one engagement where we successfully implemented Lambda auto scaling.

In this guide, we’ll walk through how our team tackled these challenges by implementing Lambda auto scaling with provisioned concurrency—a move that significantly improved both performance and cost-efficiency.

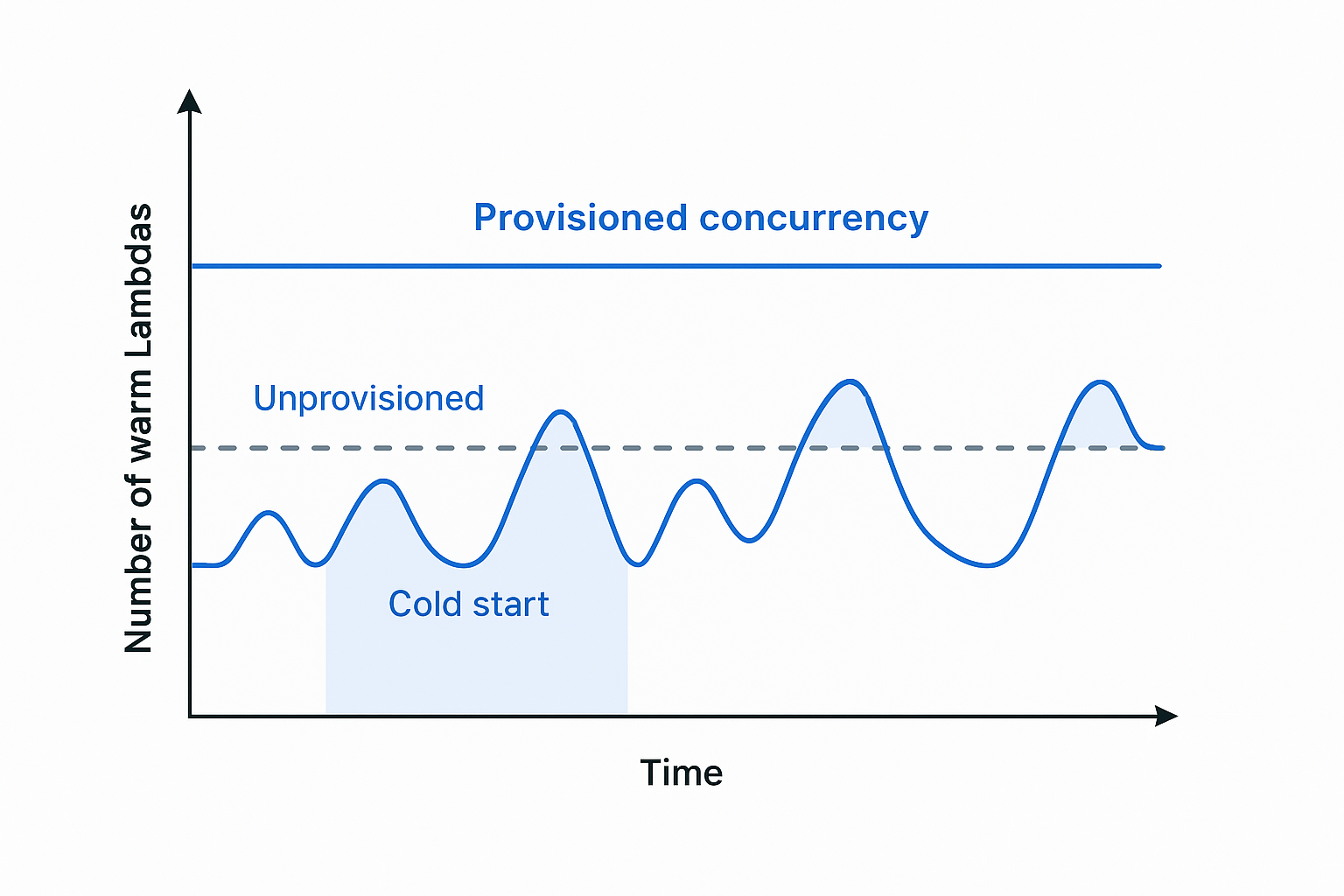

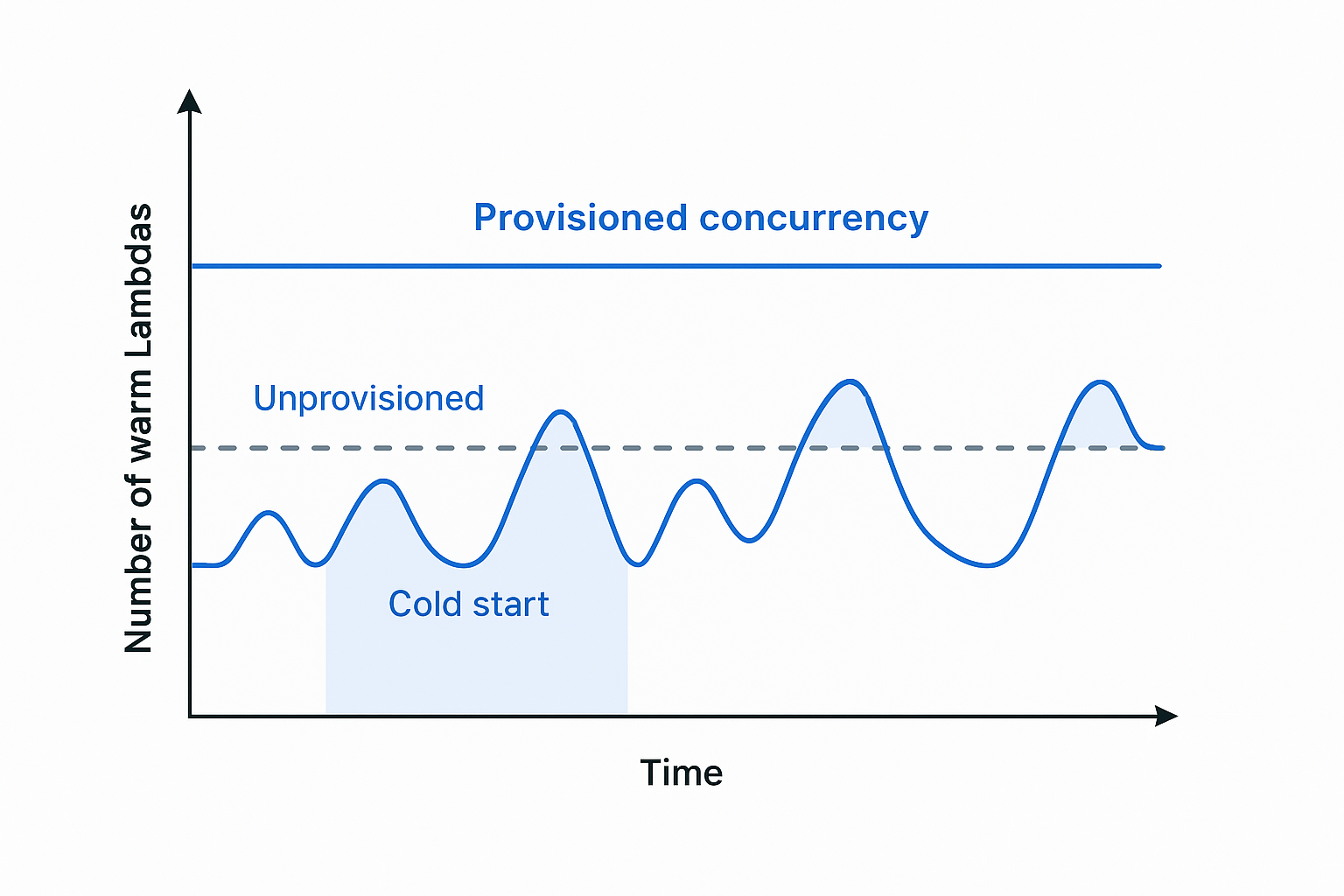

The Problem with AWS Lambda Cold Starts and Manual Scaling

Our client’s application was built using AWS Lambda, but their serverless architecture had a major bottleneck: as more users joined, Lambda functions experienced cold starts, leading to sluggish response times. To compensate, we manually increased provisioned concurrency before major events. This fixed performance, but it introduced human error and high costs when concurrency was left high after traffic dropped.

Understanding Lambda Auto Scaling with Provisioned Concurrency

A common misconception is that AWS Lambda always scales instantly. While it can spin up new instances as requests come in, each new instance incurs cold start latency. Provisioned concurrency solves this by keeping a set number of Lambda instances warm and ready, but keeping too many instances provisioned 24/7 is expensive. That’s where AWS Application Auto Scaling comes in. It dynamically adjusts provisioned concurrency based on real-time metrics, scaling up during traffic spikes and scaling down when demand slows. This gives us the performance benefits of warm Lambdas without the cost of over-provisioning.

Real-World Lambda Scaling: Our Configuration in Practice

Here’s how we implemented it using the Serverless Framework:

- Provisioned concurrency is configured for high-traffic endpoints.

- CloudWatch alarms monitor usage and trigger scaling actions.

- We maintain a buffer by targeting 70% utilization—this keeps 30% of provisioned Lambdas in reserve for surges.

- Scaling thresholds are evaluated over a 3-minute trailing window to smooth out short-term spikes.
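To make the 70% target and the 3-minute window concrete, here is a minimal Python sketch of the arithmetic. It is a simplified model, not the actual algorithm used by Application Auto Scaling (which works through CloudWatch alarms and has its own timing rules). The function names, the per-minute samples, and the min/max bounds of 5 and 100 are assumptions made up for illustration.

```python
from collections import deque

TARGET_PERCENT = 70   # from the post: aim for 70% utilization, 30% in reserve
WINDOW_MINUTES = 3    # from the post: 3-minute trailing window


def desired_provisioned(in_use_samples, minimum, maximum, target_percent=TARGET_PERCENT):
    """Return the provisioned concurrency that puts the average in-use count at the target.

    Uses integer ceiling division so the result does not depend on float rounding.
    """
    total = sum(in_use_samples)
    count = len(in_use_samples)
    wanted = -(-(total * 100) // (count * target_percent))  # ceil(avg / target)
    return max(minimum, min(maximum, wanted))


def simulate(per_minute_in_use, minimum=5, maximum=100):
    """Walk through per-minute samples, sizing capacity from the trailing window."""
    window = deque(maxlen=WINDOW_MINUTES)
    plan = []
    for in_use in per_minute_in_use:
        window.append(in_use)
        plan.append(desired_provisioned(window, minimum, maximum))
    return plan


# Hypothetical traffic: steady at 7 concurrent executions with a one-minute spike to 70.
print(simulate([7, 7, 7, 70, 7, 7, 7]))
```

In this model the one-minute spike raises the target to 40 rather than 100, because the trailing window averages it with the quieter minutes around it, and the target falls back once the spike leaves the window.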

Key Benefits of Lambda Auto Scaling

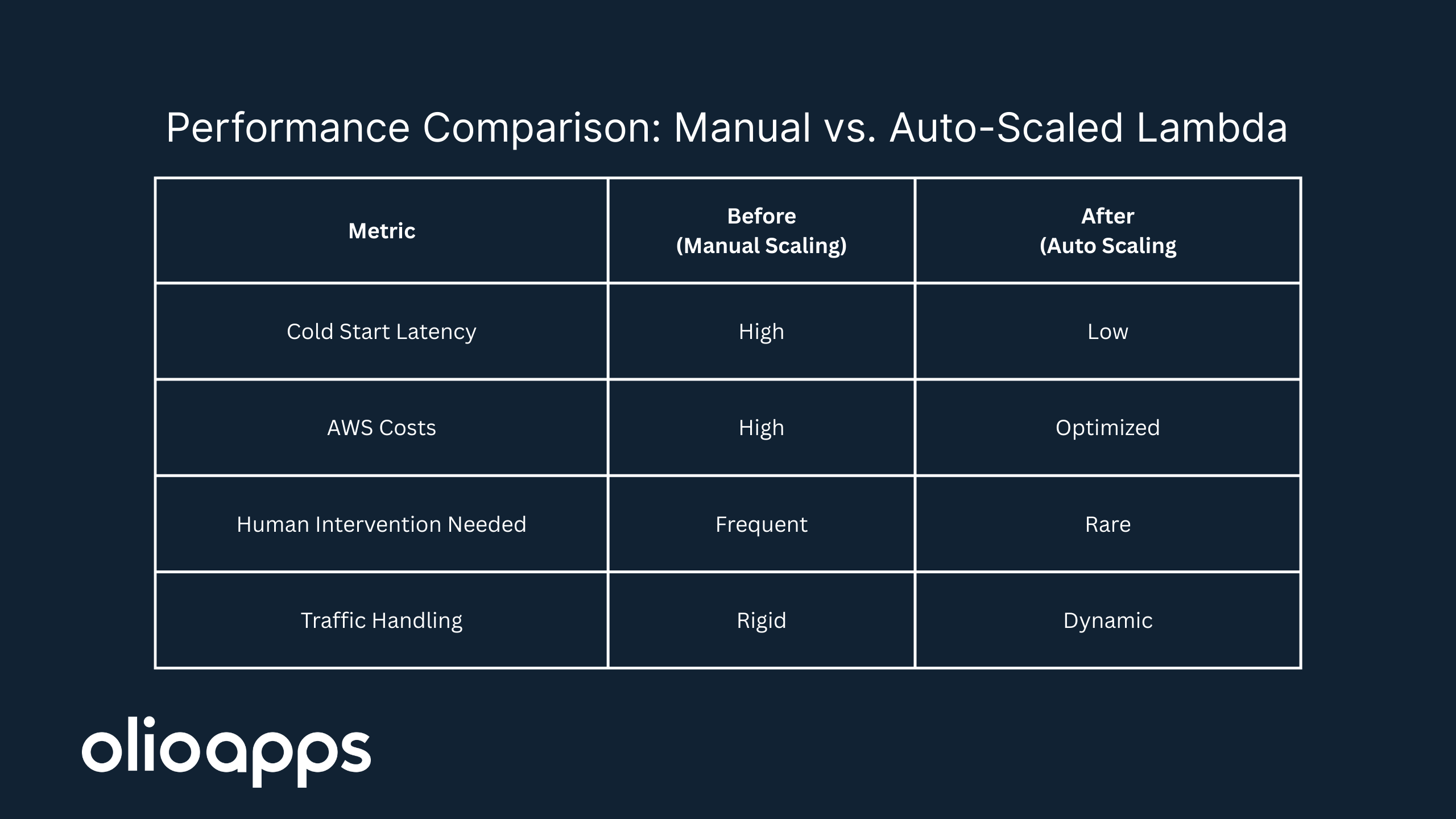

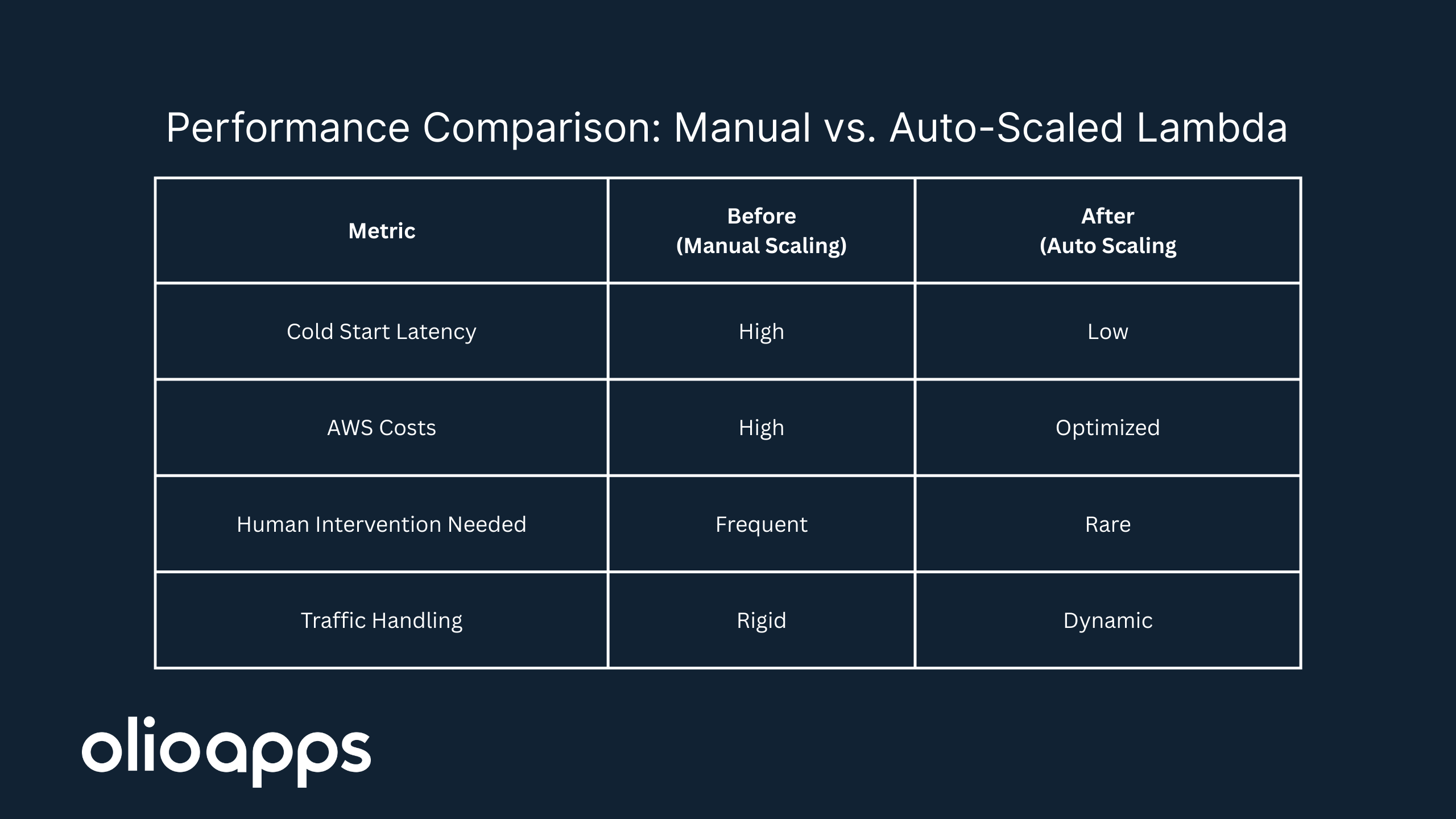

This technical refactor of our client's serverless architecture solved real business problems and alleviated serious developer pain points, all while enabling their application to scale further. In summary, the core benefits were:

Reduced Cold Start Latency

With warm Lambdas available before traffic arrives, users no longer experienced delays during onboarding events or high-concurrency scenarios.

Lower AWS Costs Without Sacrificing Performance

We eliminated the need to keep high concurrency levels running 24/7. The system automatically scales down during quiet periods, cutting unnecessary spend.

Automation Replaced Human Guesswork

No more manual adjustments to concurrency settings. CloudWatch and Application Auto Scaling now handle scaling based on real usage, reducing operational overhead and human error.

Infrastructure That Grows with Demand

The system now scales with actual traffic patterns. Whether we have 10 users or 10,000, Lambda concurrency adjusts dynamically to meet demand.
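The cost benefit above can be illustrated with a rough comparison of provisioned instance-hours. The sketch below uses a hypothetical 24-hour traffic profile, not data from the client engagement, and it deliberately leaves out AWS pricing. It only counts how many warm instance-hours each approach keeps running.

```python
def provisioned_for(in_use, target_percent=70):
    """Warm instances needed to hold `in_use` executions at the target utilization."""
    return -(-(in_use * 100) // target_percent)  # integer ceiling division


def instance_hours(hourly_in_use, fixed_level=None):
    """Total provisioned instance-hours, either at a fixed level or scaled per hour."""
    if fixed_level is not None:
        return fixed_level * len(hourly_in_use)
    return sum(provisioned_for(in_use) for in_use in hourly_in_use)


# HYPOTHETICAL day: 20 quiet hours at 7 concurrent executions, 4 busy hours at 70.
hypothetical_day = [7] * 20 + [70] * 4

peak_level = provisioned_for(max(hypothetical_day))            # sized for the busiest hour
always_on = instance_hours(hypothetical_day, fixed_level=peak_level)
scaled = instance_hours(hypothetical_day)

print(f"fixed at peak: {always_on} instance-hours")
print(f"auto scaled:   {scaled} instance-hours")
print(f"reduction:     {1 - scaled / always_on:.0%}")
```

The size of the reduction depends entirely on how spiky the traffic is. A workload that is busy most of the day would see far less difference than this made-up profile does.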

Tips for Implementing Auto Scaling with the Serverless Framework

If you’re looking to implement something similar, here are some key recommendations:

- Use the Serverless Framework with the serverless-provisioned-concurrency-autoscaling plugin.

- Define your min/max concurrency values clearly to prevent over- or under-scaling.

- Monitor with CloudWatch and adjust based on real traffic, not estimates.

- Tune your buffer and utilization targets based on observed load patterns.
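The post does not show the plugin configuration itself, so the following is not that configuration. As an alternative illustration, this Python sketch builds the two Application Auto Scaling requests (a scalable target with min/max bounds, and a target tracking policy) that this kind of setup relies on. The function name `checkout-api`, the alias `live`, the policy name, and the bounds are hypothetical. The client is assumed to be one created with `boto3.client("application-autoscaling")`. The sketch does not configure the 3-minute evaluation window mentioned earlier.

```python
def resource_id(function_name, alias):
    """Application Auto Scaling resource ID for a Lambda alias."""
    return f"function:{function_name}:{alias}"


def scalable_target_request(function_name, alias, min_capacity, max_capacity):
    """Parameters for register_scalable_target: the min/max provisioned concurrency."""
    if not 0 <= min_capacity <= max_capacity:
        raise ValueError("expected 0 <= min_capacity <= max_capacity")
    return {
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id(function_name, alias),
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }


def target_tracking_request(function_name, alias, target_utilization=0.70):
    """Parameters for put_scaling_policy: track provisioned concurrency utilization."""
    return {
        "PolicyName": f"{function_name}-{alias}-pc-utilization",  # hypothetical name
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id(function_name, alias),
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    }


def apply_autoscaling(client, function_name, alias, min_capacity, max_capacity):
    """Send both requests using an Application Auto Scaling client passed in by the caller."""
    client.register_scalable_target(
        **scalable_target_request(function_name, alias, min_capacity, max_capacity)
    )
    client.put_scaling_policy(**target_tracking_request(function_name, alias))
```

Passing the client in keeps the request-building logic testable without AWS credentials. With real credentials, a call might look like `apply_autoscaling(boto3.client("application-autoscaling"), "checkout-api", "live", 5, 50)`, with the bounds chosen from observed traffic rather than estimates.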

Final Thoughts: Smarter Scaling, Lower Costs, and Better Performance

By combining AWS Lambda provisioned concurrency with auto scaling, and isolating our environments, we created a system that is more resilient, cost-effective, and scalable. If your team is manually adjusting concurrency or struggling with cold start latency, this pattern could save you time, money, and headaches.

Want a more detailed walkthrough or help applying this in your stack? Get in touch.

Scott Becker

CEO

Scott founded Olio Apps in 2012. He helps define the scope of customer engagements and oversees the business administration of Olio Apps. Scott’s areas of specialty are technical design, product design, and full stack engineering. Outside of work, he enjoys being with family, traveling to new places, playing music with others, and spending time in nature.
